Introduction

The official Gravity Forms Moderation Add-On uses the Perspective API, provided by Jigsaw and Google, to analyze your form submissions with machine learning and to trap and filter undesirable language and inputs.

With this add-on installed, you can set filterable attributes, a toxicity threshold, and custom word lists for each of your forms to help filter out unwanted inputs.

Prerequisites

- Gravity Forms 2.7 or later.

- A Perspective API key.

- The Moderation Add-On, installed.

Settings

The Moderation Add-On includes settings at the add-on level, as well as settings for each form. Each form's settings default to the global settings, but can be customized per form. Once you have customized a form and saved its Moderation settings, that form will no longer inherit any changes made to the global default settings.
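The inheritance rule described above can be sketched in a few lines of Python. This is an illustration only, not the add-on's code: the helper name `effective_settings` and the setting keys (`threshold`, `attributes`) are hypothetical.

```python
from copy import deepcopy
from typing import Optional


def effective_settings(global_defaults: dict, saved_form_settings: Optional[dict]) -> dict:
    """Return the settings a form would use (hypothetical helper)."""
    if saved_form_settings is None:
        # The form was never customized, so it follows the current global defaults.
        return deepcopy(global_defaults)
    # The form was customized and saved, so global changes no longer reach it.
    return deepcopy(saved_form_settings)


# Hypothetical setting names and values, for illustration only.
global_defaults = {"threshold": 0.8, "attributes": ["TOXICITY"]}
saved_for_form = {"threshold": 0.6, "attributes": ["TOXICITY", "INSULT"]}

# The global default is changed after the form settings were saved.
global_defaults["threshold"] = 0.9

uncustomized_form = effective_settings(global_defaults, None)
customized_form = effective_settings(global_defaults, saved_for_form)
```

In this sketch the uncustomized form picks up the new global threshold, while the customized form keeps the value it was saved with.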

Refer to this article for add-on setup, and to this Form Settings reference list for a description of each available form-level setting.

How It Works

Operation and Scoring

You can turn moderation on or off for each form using a toggle in the form settings. While it is on, all form inputs received are encoded and passed securely to the Perspective API. The API returns a score between 0 (not toxic) and 1 (extremely toxic) for each attribute being evaluated. The overall Toxicity Score for the entry is the highest score returned across the selected attributes.

For example, if your form submissions are being evaluated for toxicity, insult and profanity, then a score equal to or exceeding your form threshold in any of those attributes will result in the entry being marked as toxic.
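To make the calculation concrete, here is a minimal Python sketch. The nested layout of the sample response (`attributeScores`, then the attribute name, then `summaryScore.value`) follows the Perspective API's documented response format. Everything else is an assumption for illustration: the function names, the sample scores, and the 0.8 threshold are made up, and this is not the add-on's own implementation.

```python
from typing import Iterable


def overall_toxicity_score(response: dict, selected_attributes: Iterable[str]) -> float:
    """Highest summary score across the selected attributes (0.0 if none are present)."""
    scores = response.get("attributeScores", {})
    values = [
        scores[name]["summaryScore"]["value"]
        for name in selected_attributes
        if name in scores
    ]
    return max(values, default=0.0)


def is_toxic(score: float, threshold: float) -> bool:
    """An entry is toxic when its score meets or exceeds the form threshold."""
    return score >= threshold


# Made-up sample response in the Perspective API response shape.
sample_response = {
    "attributeScores": {
        "TOXICITY": {"summaryScore": {"value": 0.42, "type": "PROBABILITY"}},
        "INSULT": {"summaryScore": {"value": 0.87, "type": "PROBABILITY"}},
        "PROFANITY": {"summaryScore": {"value": 0.15, "type": "PROBABILITY"}},
    }
}

score = overall_toxicity_score(sample_response, ["TOXICITY", "INSULT", "PROFANITY"])
flagged = is_toxic(score, threshold=0.8)  # hypothetical form threshold
```

Here the insult score (0.87) is the highest of the three, so it becomes the overall score, and the entry is flagged because 0.87 is at or above the assumed threshold of 0.8.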

For more information on how the Perspective API classifies attributes, see its documentation.

Positive Matches

If any score meets or exceeds the established threshold, the entry will be identified as toxic. It will then be treated based on the action setting you have specified. This can include relocating the entry into a separate area (see Toxic Entries box below), or immediately deleting the entry.

Language Support

The Perspective API works in a number of languages, although not all filter attributes may be available for evaluation in all languages. See this third-party developer article for more information. The attribute list presented in the form settings is based on the API's English-language support. As such, a filterable attribute being listed does not mean that the attribute is supported in the language your site is using.

Toxic Entries Box

Installing this add-on creates a new category on the Entries list page, titled Toxic. If you have selected the form setting that filters toxic entries into that box, this is where you will find them.

When viewing the Toxic box in list view or entry detail view, inputs will be blurred to protect the reader from the possibly toxic content. To review the entry, click the eye icon on the entry detail page to reveal all entry text.

Notifications and Confirmations

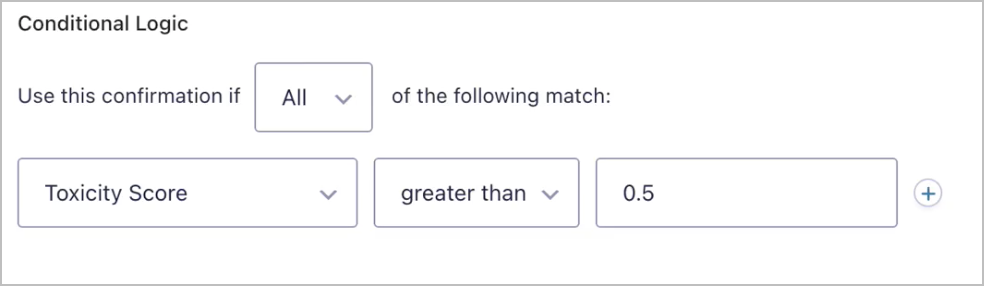

The Toxicity Score of an entry can be used within conditional logic in order to take specific actions based on a calculation you define.

For example, you may want to send notifications to a special moderator mailbox in the case of exceptionally toxic entries. Or perhaps you want to display a confirmation message saying that the submission has been deleted due to its content, or that it is “under review for toxic content that may result in platform action” if you have chosen to relocate toxic entries to the Toxic entries box.
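The moderator-mailbox example can be pictured as a single rule that compares the Toxicity Score against a value you choose. The Python sketch below is purely illustrative and does not reflect how Gravity Forms stores or evaluates conditional logic: the `Rule` class, the `notification_recipients` helper, the 0.95 cutoff, and the example.com addresses are all hypothetical.

```python
import operator
from dataclasses import dataclass

# Comparison operators a score-based rule might offer (assumed set).
OPERATORS = {
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
}


@dataclass
class Rule:
    """A hypothetical rule of the form: Toxicity Score <op> <value>."""
    op: str
    value: float

    def matches(self, toxicity_score: float) -> bool:
        return OPERATORS[self.op](toxicity_score, self.value)


def notification_recipients(toxicity_score: float, default_to: str,
                            moderator_to: str, escalation: Rule) -> list:
    """Always notify the default mailbox; add the moderator when the rule matches."""
    recipients = [default_to]
    if escalation.matches(toxicity_score):
        recipients.append(moderator_to)
    return recipients


# Escalate only exceptionally toxic entries (0.95 is an arbitrary example value).
escalation = Rule(op=">=", value=0.95)
mild = notification_recipients(0.82, "admin@example.com", "moderators@example.com", escalation)
severe = notification_recipients(0.97, "admin@example.com", "moderators@example.com", escalation)
```

With these assumed values, only the entry scoring 0.97 is also routed to the moderator mailbox.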

Entry Exporting

By default, entries marked as Toxic are not included when you export entries. To override that default, add a conditional logic rule to your export that specifies the Toxicity Score conditions under which entries should be exported.